The following deepfake artifacts are from an assignment for the NYU ITP Faking the News course in fall 2018. We created them using FakeApp 1.1 and our own facial training data, extracted programmatically from video clips. The assignment's purpose was to understand how deepfakes and the neural networks behind them work, and whether this synthetic media could be deployed for fake news purposes. I picked Jim Cramer and Jason Statham because they have similar facial structures, which minimizes visual discrepancies, but are known for very different personas, which highlights the uncanniness between the original and synthetic media.

One of my takeaways from the deepfake exercise is how accessible these tools are, thanks to GUI applications such as FakeApp and online tutorials on how to extract face training data. This accessibility could make it easier for malicious and unethical actors to generate fake news or content that attacks individuals. My Faking the News classmates and I, however, felt confident back in 2018 that deepfakes could be detected given inconsistencies in the synthetic media (e.g. glasses and facial hair were hard to swap).

Jim Cramer on Jason Statham (from Spy)

Jason Statham on Jim Cramer (from The Daily Show with Jon Stewart)

Tools

- FakeApp 1.1

- face_recognition

- autocrop

- youtube-dl

- videogrep

- ffmpeg
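
As a rough illustration of the face extraction step, here is a minimal sketch of how a detected face box might be turned into a padded square crop for training. It is not the exact script we used: the helper name `square_crop_box` and the `margin` value are hypothetical. The only library detail it relies on is that `face_recognition.face_locations` returns boxes as `(top, right, bottom, left)` tuples.

```python
from typing import Tuple

# (top, right, bottom, left): the box order face_recognition.face_locations returns
Box = Tuple[int, int, int, int]


def square_crop_box(face: Box, frame_w: int, frame_h: int, margin: float = 0.25) -> Box:
    """Expand a face box into a padded square that stays inside the frame.

    Hypothetical helper for illustration; `margin` is an assumed padding
    fraction added on each side of the detected face.
    """
    top, right, bottom, left = face
    # Use the longer side so the whole face fits, then pad on both sides.
    side = max(bottom - top, right - left)
    side = int(round(side * (1 + 2 * margin)))
    side = min(side, frame_w, frame_h)
    # Center the square on the face, then clamp it to the frame.
    cx = (left + right) // 2
    cy = (top + bottom) // 2
    new_left = min(max(cx - side // 2, 0), frame_w - side)
    new_top = min(max(cy - side // 2, 0), frame_h - side)
    return (new_top, new_left + side, new_top + side, new_left)


# Usage with a frame exported by ffmpeg (not run here, needs face_recognition):
#   image = face_recognition.load_image_file("frame_0001.png")  # example filename
#   for box in face_recognition.face_locations(image):
#       t, r, b, l = square_crop_box(box, image.shape[1], image.shape[0])
#       face_pixels = image[t:b, l:r]
print(square_crop_box((100, 300, 200, 200), frame_w=640, frame_h=360))
```

Square crops of a consistent shape are convenient because the network expects every training image to have the same dimensions after resizing.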

How it works

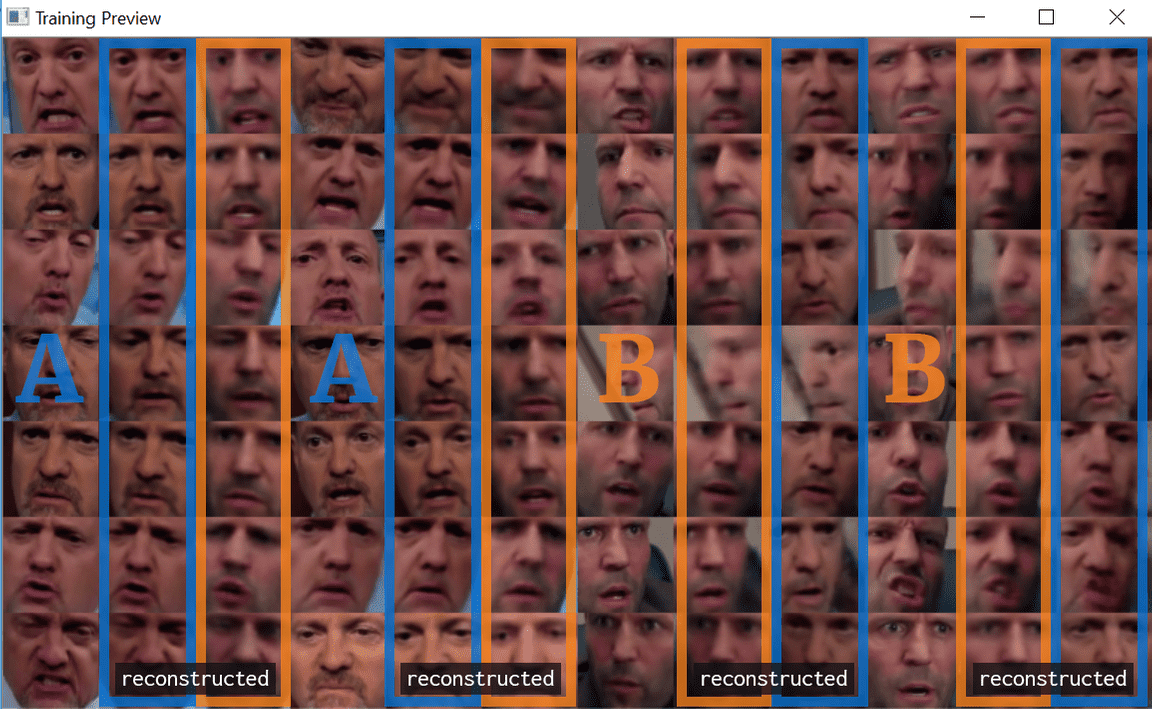

The neural network is fed cropped face images of two people and trained, through deep learning, to reconstruct each face. A neural network that learns to recreate (synthesize) a face from its input (the original face image) is known as an autoencoder. Deepfakes take two autoencoders trained together and swap their outputs, so that a face of one person is reconstructed as the other.
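
To make the swap concrete, here is a toy sketch in NumPy. It assumes the commonly described setup in which the two autoencoders share one encoder and each person has their own decoder. FakeApp's actual model is not shown in this post and would use convolutional, nonlinear layers on real images. Here the "faces" are random vectors and every layer is a single linear map, so all names and sizes are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM, LATENT, N = 16, 8, 200  # toy sizes; real inputs are cropped face images


def make_faces(n):
    """Stand-in 'faces' for one person: points in a 4-dimensional subspace."""
    basis = rng.normal(size=(4, DIM))
    return rng.normal(size=(n, 4)) @ basis / 2.0


def mse(x, y):
    """Mean squared reconstruction error."""
    return float(np.mean((x - y) ** 2))


def train_step(x, enc, dec, lr=0.01):
    """One gradient-descent step on the reconstruction error of dec(enc(x))."""
    z = x @ enc                      # encode
    err = z @ dec - x                # decode and compare with the input
    grad_dec = z.T @ err / len(x)
    grad_enc = x.T @ (err @ dec.T) / len(x)
    enc -= lr * grad_enc             # in-place: the encoder is shared by both people
    dec -= lr * grad_dec


faces_a, faces_b = make_faces(N), make_faces(N)
enc = rng.normal(scale=0.1, size=(DIM, LATENT))    # shared encoder (assumption)
dec_a = rng.normal(scale=0.1, size=(LATENT, DIM))  # decoder for person A
dec_b = rng.normal(scale=0.1, size=(LATENT, DIM))  # decoder for person B

loss_a_before = mse(faces_a @ enc @ dec_a, faces_a)
loss_b_before = mse(faces_b @ enc @ dec_b, faces_b)

# Train both autoencoders together, alternating between the two people.
for _ in range(1500):
    train_step(faces_a, enc, dec_a)
    train_step(faces_b, enc, dec_b)

loss_a_after = mse(faces_a @ enc @ dec_a, faces_a)
loss_b_after = mse(faces_b @ enc @ dec_b, faces_b)
print("A loss:", loss_a_before, "->", loss_a_after)
print("B loss:", loss_b_before, "->", loss_b_after)

# The swap: encode person A's faces, but decode them with person B's decoder.
swapped = faces_a @ enc @ dec_b
```

Because the encoder is shared, it is pushed to represent both people in one common code, which is what lets person B's decoder produce something sensible from person A's input.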